I’ve been learning a lot about Kubernetes these last couple of months, and wanted to get my homelab setup to a point that makes deploying clusters easy and reliable. When I first started, I simply used minikube and Docker on my desktop. However, the need for something more “robust” and “enterprise” quickly arose.

Being relatively new to Kubernetes (k8s), I first needed information. I’m in the middle of Kubernetes Fundamentals (LFS285) from LinuxFoundation.org. Additionally, I was lucky enough to have Nigel Poulton send me a copy of The Kubernetes Book (Klingon Edition – seriously!) for finding the hidden Klingon in the “code” in the background of the book cover. These two resources will keep me busy for the rest of this year, along with the many blog posts on various Linux, Docker, and Kubernetes steps I’ve found along the way.

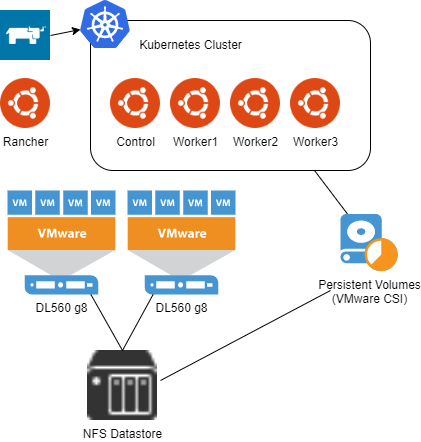

The Architecture

Originally, I thought about using Raspberry Pis for my k8s lab, but realized that I didn’t want to be on ARM CPUs. Since I already had a VMware homelab with plenty of space, using it made the most sense. I started by deploying 4 Ubuntu VMs and then using NFS for persistent storage in the cluster I created. It worked fine, but my NFS storage (Synology) didn’t support snapshotting my persistent volumes.

Next, I used the same Ubuntu cluster, added a second disk to each VM, and deployed Ceph for my cluster’s persistent storage. Unfortunately, I kept running into issues and in the end, abandoned that approach.

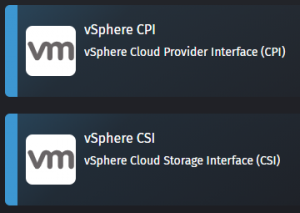

Since I had just upgraded my VMware lab to vSphere 7, I decided to use the vSphere CSI to provide persistent volumes to my cluster. Again out of my depth, I found it hard to navigate getting the proper taints on my existing cluster and getting the VMware CPI and CSI installed and set up within it. This led to the final solution:

Rancher.

I deployed a single Ubuntu VM and installed Rancher on it. Then, within Rancher, I set up a Node Template for vSphere using my Ubuntu template. Rancher includes a VMware CPI & CSI app in the catalog, which makes it very simple to deploy new clusters with everything required to consume VMware storage.

Installing Rancher

I wanted to have Rancher exist outside any clusters I would make, so using a single Ubuntu VM made sense. And since Rancher runs in Docker, it also made deployment super easy. I simply followed Rancher’s single node Docker deployment instructions.

First, I installed Ubuntu 18.04 LTS on a VM with 2 vCPUs and 4 GB of RAM and got it up to date:

sudo apt-get update && sudo apt-get upgrade

Next was installing Docker. Rancher provides a simple script, so I decided to save a little typing and use that.

curl https://releases.rancher.com/install-docker/19.03.sh | sh

Last was a simple docker run command to get Rancher going:

docker run -d --restart=unless-stopped \
  -p 80:80 -p 443:443 \
  --privileged \
  rancher/rancher:latest

That was simple! At this point, all I have is Rancher running in Docker on an Ubuntu VM. Now, in order to get clusters dynamically provisioned, I need to set up an Ubuntu template that I can deploy more VMs from.
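One caveat with the command above: the `latest` tag moves over time, and without a volume Rancher’s data lives only inside the container. Below is a minimal sketch of a more repeatable variant, assuming a pinned image tag and a host directory bind-mounted to `/var/lib/rancher`. The helper name `rancher_run_cmd`, the tag `v2.4.5`, and the `/opt/rancher` path are illustrative assumptions, not part of the original setup. The helper only prints the command so it can be reviewed before running.

```shell
#!/bin/sh
# Hypothetical helper: prints (does not run) a docker command for a pinned
# Rancher release with its data bind-mounted on the host.
#   $1 = image tag (required); v2.4.5 below is only a placeholder
#   $2 = host data directory (optional, defaults to /opt/rancher)
rancher_run_cmd() {
    tag="$1"
    data_dir="${2:-/opt/rancher}"
    if [ -z "$tag" ]; then
        echo "usage: rancher_run_cmd TAG [DATA_DIR]" >&2
        return 1
    fi
    printf 'docker run -d --restart=unless-stopped -p 80:80 -p 443:443 --privileged -v %s:/var/lib/rancher rancher/rancher:%s\n' \
        "$data_dir" "$tag"
}

# Dry run: show the command for review before executing it by hand.
rancher_run_cmd v2.4.5
```

Pinning the tag means a container restart or host rebuild brings back the same Rancher version, and the bind mount keeps Rancher’s state on the VM’s disk if the container is removed.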

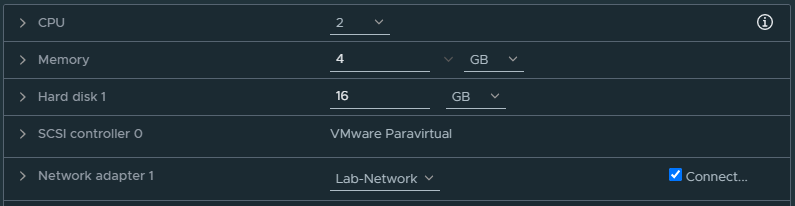

Ubuntu Template

I decided to stick with Ubuntu 18.04 LTS, so I spun up a new VM with 2 vCPUs, 4 GB of RAM, and 16 GB of disk. I set the storage controller to VMware Paravirtual since I will be using the vSphere CSI for storage inside my clusters.

I made sure to enable SSH during the install, and then once the server was ready, I logged in and ran updates.

sudo apt-get update && sudo apt-get upgrade

Next, there are just a couple of things we need to do for Kubernetes to run properly on this. First is disabling swap and making sure it stays that way after a reboot.

sudo swapoff -a
sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

Next, since I will be using DHCP in my environment instead of static IPs, I need to make sure that the Ubuntu VMs get unique addresses. By default, Ubuntu’s DHCP client doesn’t identify itself by MAC address, but rather by an ID derived from the machine ID, which is generated during install. By clearing it with the following command, we ensure a new machine ID is generated every time we deploy from the template.

echo "" | sudo tee /etc/machine-id >/dev/null

Finally, Rancher will use cloud-init to configure the VMs it deploys from our template, so we will want to install that as well.

sudo apt-get install cloud-init

At this point, I don’t want to reboot the server anymore, otherwise I’ll lose its “clean” state. I shut down the Ubuntu server, then right-clicked it and converted it to a template.
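Before shutting the VM down, it can be worth confirming that the steps above actually took effect. For example, the sed pattern only matches swap entries whose fields are separated by spaces, so a tab-separated fstab line would be left untouched. The following is a minimal sketch of such a check; the function names are hypothetical helpers, and the default paths assume a stock Ubuntu layout.

```shell
#!/bin/sh
# Hypothetical pre-template checks; the function names are illustrative only.

# Succeeds if the given fstab has no active (uncommented) swap entry.
fstab_swap_disabled() {
    ! grep -Eq '^[^#].*[[:space:]]swap[[:space:]]' "$1"
}

# Succeeds if the machine-id file exists and is empty or whitespace-only.
machine_id_cleared() {
    [ -f "$1" ] && [ -z "$(tr -d '[:space:]' < "$1")" ]
}

# Runs all checks and reports each problem; returns non-zero if any failed.
#   $1 = fstab path (default /etc/fstab)
#   $2 = machine-id path (default /etc/machine-id)
check_template() {
    fstab="${1:-/etc/fstab}"
    machine_id="${2:-/etc/machine-id}"
    status=0
    fstab_swap_disabled "$fstab" || { echo "swap still enabled in $fstab"; status=1; }
    machine_id_cleared "$machine_id" || { echo "machine ID not cleared in $machine_id"; status=1; }
    command -v cloud-init >/dev/null 2>&1 || { echo "cloud-init not installed"; status=1; }
    return "$status"
}

# Usage on the template VM, just before shutdown:
#   check_template && echo "template looks clean"
```

Each check takes its file path as an argument, so the functions can also be tried against copies of the files without touching the real system.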

What’s next?

In the next blog post, I’ll set up a Node Template inside Rancher as well as add a Catalog App to make the VMware storage integration incredibly simple!

Thanks for posting Tim! Sounds like you and I are both doing some of the same experiments. Great to share notes and get another perspective. I started with Rancher too but eventually settled on Kubeadm. I think there are also some strong arguments for Kubespray, but it’s a bit over my head for now, at least until I get more comfortable with Ansible. https://www.jdwallace.com/post/kubeadm

I’ve also been experimenting with CSI drivers, but for obvious reasons have been working with Pure’s CSI driver.

https://www.jdwallace.com/post/pure-service-orchestrator

Keep sharing what you’re learning! This is great stuff.

Thanks, JD. Yeah, I’ve been using kubeadm for cluster creation previously. My major hang-up was the vSphere CPI / CSI. This setup simplifies that portion (which you’ll see in my next post!).

Hi Tim…great post! I’ve kinda landed in the same place that you have with using Rancher as the management plane for a Kubernetes cluster.

I’m struggling with one issue though. For the Kubernetes cluster VMs (master and workers), should these have their own IP subnet to interface with the Rancher instance? Some setups that I see run behind a NAT’ed subnet, which makes the cluster difficult to manage from other parts of the house, so I would have to be at the virtual machine host. Similarly, if I were to scale the cluster to include other hardware hosts, then the subnetting issues would need to be addressed. So, do you use a private subnet on VMware to manage the Kubernetes cluster, and do you run the Rancher management plane with an interface into the private subnet?

You’ll want IP connectivity if using Rancher to manage the clusters. In my case, Rancher is on the same subnet as my Ubuntu VMs used for k8s.

NAT is possible if you have the proper ports forwarded, but I’m not a fan as it just adds complexity.

I put together a playground cluster using Vagrant which works as I intended. It’s based on VirtualBox rather than VMware, but it’s easy enough to change it to use VMware to make your setup above repeatable.

https://github.com/apwiggins/k8s_ubuntu

Now on to something more useful!

Hi Tim.

I’m currently trying to learn Kubernetes and would like to set up a home lab similar to what you describe. I don’t, however, have vSphere, but I do have VMware Workstation 15 Pro. Will I be able to build something similar?

vSphere would be required for the Rancher templates and the CSI. You may want to look into minikube for running Kubernetes on your desktop.

Hi Tim, great post, and I’m slowly working through it. I am having an issue when I try to deploy the cluster: “Error creating machine: Error detecting OS: Too many retries waiting for SSH to be available. Last error: Maximum number of retries (60) exceeded”. I created a generic Ubuntu Server image for the template, but I’m not sure where I went wrong. Any ideas?

Did you use the cloud-init Ubuntu image, or install cloud-init as I did in the post? Rancher uses cloud-init during deployment to generate the SSH keys it needs to deploy the Rancher agent and k8s.