Installation of VMware’s Virtual SAN (VSAN) couldn’t be easier. No, really!

To start with, my lab environment has 4 servers that will participate in the VSAN cluster, 3 of which will provide storage resources to it.

- 3x DL160 G6 with:

  - 128GB SSD

  - 3x 250GB SATA drives (or larger)

- 1x DL360 G6

At this point, I have already added all 4 servers into a cluster with HA off.

Step 1: Enable the VSAN service on a VMKernel port.

- Create your virtual switches, and create at least 1 VMKernel port that will be used for VSAN traffic.

- Be sure to keep the Switch names the same across hosts!

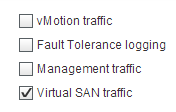

- Edit the VMKernel port and check the box for Virtual SAN Traffic.

- Save the port settings, repeat for each host.

- Time Saver: Use host profiles!

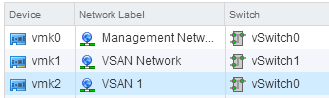

In my lab, I am using 2 standard virtual switches, each with a VMKernel port. One is dedicated to VSAN traffic, and the other is shared between Management and Virtual Machine traffic.
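If you would rather tag the VMKernel port from the command line than click through each host, `esxcli vsan network ipv4 add` does the same job. The sketch below only builds the command strings so you can review them before running anything over SSH. The host names and the `vmk1` interface are hypothetical placeholders, not values from this lab.

```python
from typing import Dict, List


def vsan_tag_command(vmk: str) -> str:
    """Return the esxcli command that enables VSAN traffic on one VMKernel port."""
    return f"esxcli vsan network ipv4 add -i {vmk}"


def commands_for_hosts(hosts: List[str], vmk: str = "vmk1") -> Dict[str, str]:
    """Map each host name to the command to run on it.

    Assumes the VSAN VMKernel port has the same name on every host.
    """
    return {host: vsan_tag_command(vmk) for host in hosts}


if __name__ == "__main__":
    # Hypothetical host names; replace with your own.
    lab_hosts = ["esx01", "esx02", "esx03", "esx04"]
    for host, cmd in commands_for_hosts(lab_hosts).items():
        print(f"{host}: {cmd}")
```

Every host that needs to reach the VSAN datastore gets the command, including hosts that contribute no disks.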

Step 2: Enable VSAN on the Cluster

- Select your cluster, choose the Manage tab, and then select General under Virtual SAN.

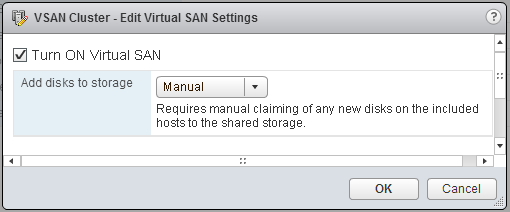

- Edit, and check the box to Turn ON Virtual SAN.

- Choose your setting for “Add disks to storage”:

  - Manual – You will select each disk that will be a part of the Virtual SAN

  - Automatic – VSAN will select all eligible disks for you and add them

- Click OK

Step 3: Add Disks to Disk Groups

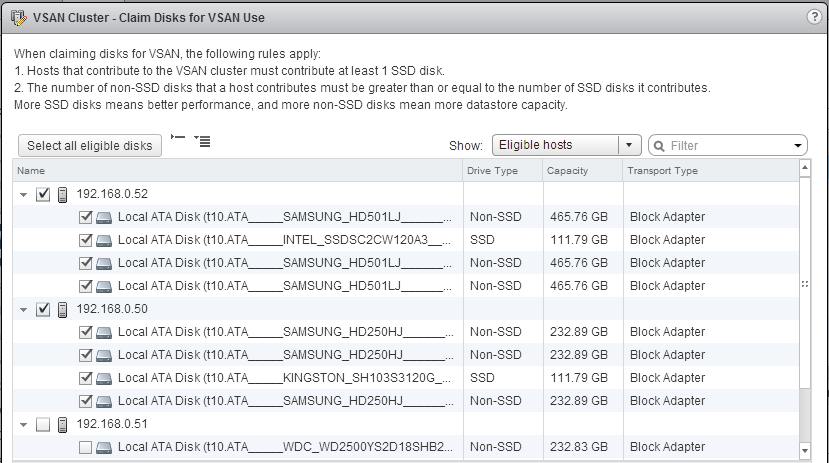

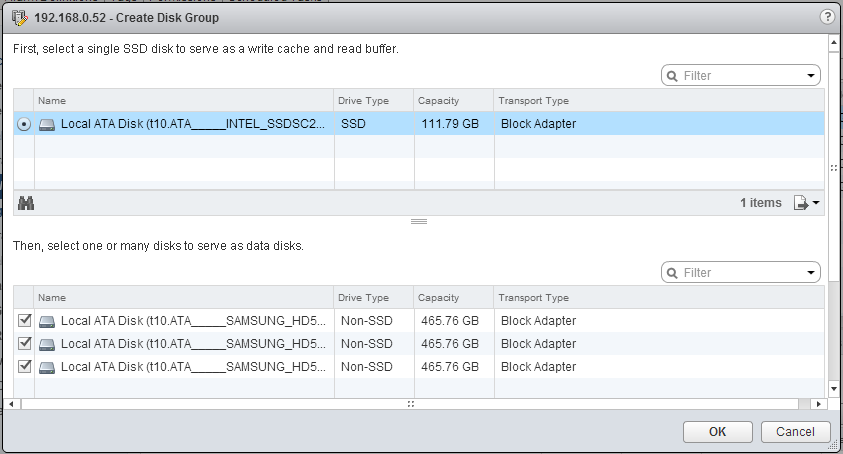

Since I chose Manual mode, I will need to add my disks into Disk Groups. A Disk Group is a collection of 1 SSD, and multiple HDD drives. You can have multiple disk groups per host if capacity allows.

- Still in Virtual SAN settings under the cluster, select the Disk Management section.

- Click on the Claim Disks button, and select the drives for use in Virtual SAN.

- Alternatively, select each host, and manually create Disk Groups per host.

Claim Disks:

Create Disk Group:
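The disk group rule described in this step (one SSD plus one or more HDDs) can be sketched as a small data structure. This is only an illustration: the device names are hypothetical, and the upper limit of 7 HDDs per group is an assumption taken from VMware's published VSAN limits rather than from anything shown in this walkthrough.

```python
from dataclasses import dataclass
from typing import List

# Assumption: VMware's documented per-group limit, not stated in this post.
MAX_HDDS_PER_GROUP = 7


@dataclass
class DiskGroup:
    ssd: str          # the single SSD in the group
    hdds: List[str]   # the HDDs that provide the group's capacity

    def validate(self) -> None:
        """Raise ValueError if the group breaks the one-SSD, 1..7-HDD rule."""
        if not self.ssd:
            raise ValueError("a disk group needs exactly one SSD")
        if not 1 <= len(self.hdds) <= MAX_HDDS_PER_GROUP:
            raise ValueError(
                f"a disk group needs 1 to {MAX_HDDS_PER_GROUP} HDDs, "
                f"got {len(self.hdds)}"
            )


if __name__ == "__main__":
    # Mirrors one DL160 G6 in this lab: 1 SSD and 3 SATA drives.
    # Device names are made up for the example.
    group = DiskGroup(ssd="ssd-128gb", hdds=["sata-1", "sata-2", "sata-3"])
    group.validate()
    print("disk group layout is valid")
```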

Step 4: Start building Virtual Machines!

Yes, it is that easy. You now have a datastore called vsanDatastore.

My DL360 G6 server can also access this datastore, since I enabled VSAN on the VMKernel port groups, even though it’s not providing any resources to the VSAN cluster.


Did this happen to be with VSAN beta bits or with 5.5u1?

This was with the Beta, but the process is identical with the GA.

Right, just curious whether the Gen6 uses an AHCI controller, as it had support in the beta 2 bits but was pulled from the GA.

Correct. I was using AHCI and was able to install since I was using beta. AHCI was pulled from the GA, and is no longer on the HCL.

Tim,

I am assuming your DL160s have RAID controllers in them. If they do, did you configure any RAID groups for the SSD and/or HDD drives prior to installing ESXi? Is ESXi installed on a USB drive?

The DL160s have pass-through capable HBAs installed, so I do not have any arrays set up. The HBA passes each disk directly into ESXi, so the host sees them natively. I have ESXi installed on a USB drive for these hosts.

Are the data (storage) drives shown as a single datastore in Datastore Browser, or as separate datastores? Thanks.

VSAN creates a single datastore from all the storage.

Thanks. That was the only thing that was not clear to me. Going to try to implement it now 🙂

Just a curiosity. In the previous steps you wrote: “At this point, I have already added all 4 servers into a cluster with HA off”.

After the Virtual SAN configuration, can HA be enabled again?

What happens if one cluster node goes down (hardware failure)?

I have exactly your server model and I’m starting a new vSphere cluster, but I’m unsure whether it is better to use Virtual SAN with storage on the cluster nodes or a classic design with a software iSCSI SAN (2 Openfiler HA servers connected via 10Gb Ethernet).

With the classic design I’m sure that vSphere HA, vMotion, etc. are available, but I don’t know about Virtual SAN.

Thanks!

Yes. VSAN fully supports HA, vMotion, etc. When a host fails, the VMs running on that host will restart on the remaining hosts if HA is enabled, assuming the VM’s storage policy is set to the default or explicitly set to tolerate 1 or more host failures. I would run VSAN, as you won’t have extra steps when entering maintenance mode to update hosts, etc., since it’s integrated into the kernel.
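How many host failures a policy can tolerate depends on how many hosts contribute storage. A rough sketch, assuming the 2n+1 rule from VMware's VSAN documentation for mirrored objects (to tolerate n failures you need at least 2n+1 hosts providing storage); that rule is not stated in this thread, and the function names are made up for illustration.

```python
def min_hosts_for_ftt(ftt: int) -> int:
    """Storage hosts needed to tolerate `ftt` host failures (assumed 2n+1 rule)."""
    if ftt < 0:
        raise ValueError("ftt must be zero or positive")
    return 2 * ftt + 1


def max_ftt(storage_hosts: int) -> int:
    """Largest failures-to-tolerate value a cluster of this size can satisfy."""
    return max((storage_hosts - 1) // 2, 0)


if __name__ == "__main__":
    # The lab in this post has 3 hosts contributing storage.
    print("hosts needed for FTT=1:", min_hosts_for_ftt(1))
    print("max FTT with 3 storage hosts:", max_ftt(3))
```

Under that assumption, the 3 storage hosts in the post are the minimum for the default policy of tolerating one failure.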

Sorry to drag this back up, but I’m curious; how much space was vSAN reporting as available for you? I haven’t quite found this out yet, but (minus the flash cache) is it the combined storage of all the nodes or a single node?

It will show the available raw storage for the entire cluster. As you provision a machine, if you set it to FTT=1 (default) then it will provision your used space x 2. So, it accurately reports free space, but depending on your policies you will consume it at different rates.

So, effectively a 3 node storage cluster with 3x750GB drives (& 3 x ‘SSD’ at 100GB each) would show at 2.25TB without fault tolerance, or 1.125TB available with fault tolerance set to 1 once fully provisioned?

Datastore capacity would show ~2.25TB. FTT is set at the VM level (storage policy) and won’t affect the datastore capacity. But if you provision a 50GB VM with the default policy (FTT=1), you will have consumed 100GB of that 2.25TB.
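The arithmetic in this exchange can be written out as a short sketch. It assumes the simple model described above: the datastore shows the raw HDD capacity of all hosts, the SSDs are cache and add nothing, and a VM consumes its size multiplied by FTT + 1. It ignores metadata and witness overhead, and the function names are made up for illustration.

```python
def raw_capacity_gb(hosts: int, hdd_gb_per_host: int) -> int:
    """Raw datastore capacity: HDD space summed across all storage hosts."""
    return hosts * hdd_gb_per_host


def consumed_gb(vm_size_gb: int, ftt: int = 1) -> int:
    """Space a VM consumes: one copy plus one extra copy per failure tolerated."""
    return vm_size_gb * (ftt + 1)


if __name__ == "__main__":
    total = raw_capacity_gb(hosts=3, hdd_gb_per_host=750)   # 2250 GB, ~2.25TB
    used = consumed_gb(vm_size_gb=50, ftt=1)                # 100 GB
    print(f"datastore capacity: {total} GB")
    print(f"50GB VM at FTT=1 consumes: {used} GB")
    print(f"remaining: {total - used} GB")
```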

Great thank you!


I know you need SSD for the cache space but can you use SSD for the storage space as well and not run in all-flash mode?

You can’t mix SSD and HDD in the capacity tier of a disk group. So, using SSD in the capacity tier would by definition make it all-flash. However, you can create multiple disk groups per cluster, so if you had, at minimum, 3 SSDs and 1 HDD in each host, you could create 2 disk groups. Disk Group 1: SSD (cache), SSD (capacity). Disk Group 2: SSD (cache), HDD (capacity).

We have three hosts, each with a small SSD (120GB) for cache and a 500GB SSD for storage. Don’t want to mix drive types. It is for a small system where we would have no more than 12 VMs spread over the three hosts. Just curious if that is doable? Also, as I am new to vSAN, does the ESXi OS need to reside on a third device such as another HD or USB flash?

Thank you for your response!

Yep. That would be an all-flash vSAN deployment. ESXi would be installed on another storage device.

Thank you for your time and insight!

In an all-flash configuration, is it required to use 10Gb NICs?

10GbE is required for all-flash deployments.