Recently, I purchased a Synology 1817+ to be used as storage for my homelab. Previously, I was using an older and much slower Synology RS812, as well as running vSAN on my single host. While vSAN performance was great, I ran into multiple issues running it on a single host with FTT=0 and Force Provisioning set to On. Cloning, restores, and similar operations would fail because I was using vSAN in an unsupported manner. So, enter the Synology 1817+!

iSCSI or NFS?

When I got the NAS online, I had to decide whether to go block or file. After reading many reviews, it seems Synology's block (iSCSI) implementation is not quite up to par. So much so that most people were getting better performance using a single 1Gb/s link for NFS than using 4 Ethernet ports and round-robin multipathing on iSCSI. I decided to go with NFS over a single link. At the time, I was using a RAID 1 pair of 6TB SATA disks and a pair of 200GB SSDs for read/write cache, and was able to fill the 1Gb/s link without issue.

New SSD Added

After some searching, I purchased 4 Crucial MX500 500GB SSDs and added them to the Synology, filling out the rest of the 8 bays. I now had 2 volumes set up:

- 1.5TB SSD RAID 5 Volume

- 6TB RAID 1 Volume with 200GB Read / Write Cache assigned

Now I have plenty of storage to run my VMs, and 2 different tiers of storage to choose from: the All Flash volume or the Hybrid volume. Both performed quite well! I knew, however, that they could perform even better. 10Gb/s will be added to the homelab in the near future, but I wanted to get what I could out of what I had. So NFS 4.1 seemed like the answer, since it supports multipathing.

NFS 4.1 Considerations

First things first: Synology officially supports NFS 4, not NFS 4.1, and VMware requires NFS 4.1 to be able to use multipathing. After some reading, though, I found that my 1817+ did in fact have 4.1; it was just disabled. Here are the steps to enable NFS 4.1 on a Synology NAS:

- Enable SSH in the Synology control panel, under Terminal and SNMP

- SSH into the box with your admin credentials

- Run sudo vi /usr/syno/etc/rc.sysv/S83nfsd.sh

- Change line 90 from “/usr/sbin/nfsd $N” to “/usr/sbin/nfsd $N -V 4.1”

- Save and exit vi

- Restart the NFS service with sudo /usr/syno/etc/rc.sysv/S83nfsd.sh restart

- Run sudo cat /proc/fs/nfsd/versions to verify that 4.1 now shows up
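
The edit in the steps above can also be scripted. This is only a minimal sketch, assuming the init script contains the literal text /usr/sbin/nfsd $N exactly as quoted above. patch_nfsd_script is a hypothetical helper name, and because the line number may differ between OS versions, it matches on the text rather than on line 90. It keeps a .bak copy and does nothing if the flag is already present.

```shell
#!/bin/sh
# Sketch: append "-V 4.1" to the nfsd start line of an init script.
# Assumption: the file contains the literal text "/usr/sbin/nfsd $N".
# patch_nfsd_script is a hypothetical helper, not a Synology tool.

patch_nfsd_script() {
    script="$1"

    # Already patched? Then leave the file alone so re-runs are harmless.
    if grep -qF -- '/usr/sbin/nfsd $N -V 4.1' "$script"; then
        return 0
    fi

    # Keep a backup, then rewrite the original in place (preserves its mode).
    cp -p "$script" "$script.bak"
    sed 's|/usr/sbin/nfsd \$N|/usr/sbin/nfsd $N -V 4.1|' "$script.bak" > "$script"
}

# On the NAS, as root, the full sequence would look like:
#   patch_nfsd_script /usr/syno/etc/rc.sysv/S83nfsd.sh
#   /usr/syno/etc/rc.sysv/S83nfsd.sh restart
#   cat /proc/fs/nfsd/versions    # on stock Linux this lists entries such as +4.1
```

Matching on the text also makes it easy to re-apply after an OS update resets the file.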

Important! Since NFS 4.1 isn’t supported by Synology yet, this is not a fully persistent change. It WILL survive reboots, but it WILL NOT survive version upgrades. So, every time you apply a new Synology OS update, you need to follow the above steps again to re-enable 4.1. Since my lab is running all the time, though, I don’t update the Synology OS unless there is a specific need.

Also, you can’t “upgrade” an NFS 3 datastore to NFS 4.1. You will have to either storage vMotion machines onto a new 4.1 share, or unmount and re-mount as 4.1, which caused me issues.

Last, NFS 4.1 does NOT support VAAI. So, cloning, etc will result in reading and writing data across the LAN, instead of offloading the task to the NAS.

NFS 3 vs 4.1 performance

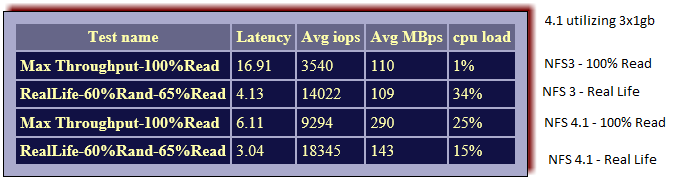

In my single host, I have 4 NICs, 3 dedicated to NFS networks. Those 3 NICs are just crossover cabled directly to the Synology 1817+. In vSphere, my host has 3 vmk ports, 1 on each subnet tied to the proper NIC to access the same subnet on the NAS. For NFS 3, only the first NIC is used. For NFS 4.1, I have entered 3 server IPs for the NFS datastore, to utilize multi-pathing over the 3 dedicated NICs. All testing is done to the All Flash Volume, and not to the Hybrid Volume.
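
For reference, a multi-IP NFS 4.1 datastore can also be mounted from the ESXi command line with esxcli storage nfs41 add. The sketch below only builds and prints that command so it can be reviewed before pasting it into an ESXi shell. The server IPs, the share path /volume2/vmware, and the datastore name ssd-nfs41 are illustrative assumptions, not values from my setup, and nfs41_mount_cmd is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: print the esxcli command that mounts an NFS 4.1 datastore
# over several server IPs. Nothing is executed against a host here.
# Usage: nfs41_mount_cmd <share> <datastore-name> <server-ip>...
nfs41_mount_cmd() {
    share="$1"
    name="$2"
    shift 2
    # Join the remaining arguments (the server IPs) with commas.
    hosts=$(IFS=,; echo "$*")
    echo "esxcli storage nfs41 add -H $hosts -s $share -v $name"
}

# Illustrative values only (assumed IPs, share path, and name):
nfs41_mount_cmd /volume2/vmware ssd-nfs41 172.16.20.1 172.16.21.1 172.16.22.1
```

Once mounted, esxcli storage nfs41 list on the host should show the datastore along with the server addresses it was mounted with.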

Deploying a Single VM from a Template

I have a Windows 2016 template, about 26GB consumed, that I use constantly for deploying new VMs into my lab, and with no VAAI in NFS 4.1, I thought it would be a good start.

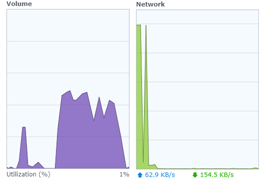

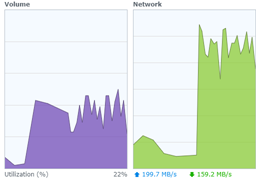

| NFS 3 | NFS 4.1 |
| --- | --- |
| The clone operation for the template took only 1m48s thanks to VAAI. As you can see, zero network traffic, and a lot of disk activity on the Synology. | Cloning took longer here, but not too much, thanks to the ability to utilize multiple NICs. 2m44s. |
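
As a rough sanity check on those timings, here is the effective clone rate worked out in a few lines of shell. This assumes the template really is about 26GB and uses decimal units (1GB = 1000MB); clone_rate is just an illustrative helper name.

```shell
#!/bin/sh
# Sketch: effective throughput of a clone.
# Arguments: size in GB, duration in seconds. Decimal units throughout.
clone_rate() {
    awk -v gb="$1" -v s="$2" 'BEGIN {
        mbps = gb * 1000 / s                       # megabytes per second
        printf "%.0f MB/s (%.2f Gb/s)\n", mbps, mbps * 8 / 1000
    }'
}

clone_rate 26 108   # NFS 3 with VAAI, 1m48s   -> 241 MB/s (1.93 Gb/s)
clone_rate 26 164   # NFS 4.1, 2m44s           -> 159 MB/s (1.27 Gb/s)
```

The NFS 3 figure is disk-side only, since VAAI keeps the copy on the NAS. The NFS 4.1 figure is what actually crossed the wire: roughly 1.27Gb/s, more than a single 1Gb/s link can carry but well short of the 3Gb/s available.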

Deploying Multiple (4) VMs from a Template

I noticed that the single clone didn’t even use 2Gb/s of bandwidth, let alone get close to the 3Gb/s that would saturate my 3 links. I wanted to see if the single operation was the problem and if I could push more by cloning more, so I used a PowerCLI script to deploy 4 VMs simultaneously from the template.

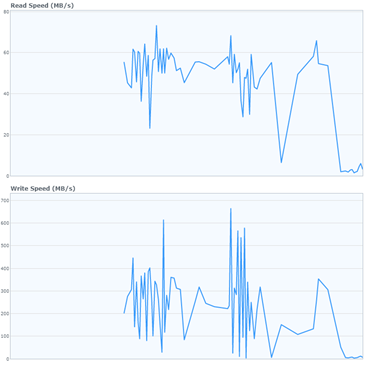

IOmeter testing

Finally, I went with the ol’ go-to for testing: IOmeter. I always head over to vmktree.org/iometer and download the presets. I ran the 100% Read and the Real Life tests over NFS 3 and NFS 4.1. While NFS 3 was the clear winner in cloning operations, thanks to the offloading capability of VAAI integration, NFS 4.1 was able to achieve higher IO rates for “normal” VM operations, thanks to the multiple NICs.

Clearly, the single NIC was a bottleneck for both the 100% Read test AND the Real Life test. While we didn’t get 3Gb/s worth of speed (~330MB/s), we got awfully close with the 100% Read test over NFS 4.1! NFS 4.1 is the winner in the IOmeter testing, able to achieve higher IOPS and higher throughput while maintaining lower latency.

Conclusions?

To be honest, it’s not as clear cut as I would have liked. I was torn on whether I would stick with NFS 3 or move to NFS 4.1. The obvious answer is to get a 10Gb/s NIC for the Synology and the host, and that’s in the future, but months away. If VAAI were available on NFS 4.1, then it would be the protocol to choose, since it already had higher IO, and the cloning operations would be just as fast as they were on NFS 3 above.

For my environment, I do deploy VMs from template quite often, maybe 6 VMs per week. I also have a large static footprint of VMs that don’t get removed and re-provisioned. But I do a lot of software deployment and VM replication & backup testing, which require higher throughput and higher IO. So, for those reasons, I decided to stay on NFS 4.1, and just wait a little longer while deploying templates.

Here’s to hoping Synology will release NFS 4.1 officially, and VMware will enable VAAI over 4.1!

Looks like NFS 4.1 is now officially available in the Synology Diskstation UI.

So I’m running into an issue with this. I cannot confirm that multipathing is actually working from a synology standpoint. ESXi shows both IPs and connects without any issue. However, under the Synology Resource Monitor you can see all connected users. I only see a single NFS connection here. I would expect to see 2 or more connections or two IPs under the one connection for the NFS connection type. I don’t believe I’m seeing the bandwidth I should see when I attempt to move files from my local volume to the NFS volume attached to my ESXi host.

I have a vSwitch with 2 vmkernel ports with separate IPs along with 3 physical adapters attached to that one vSwitch. Per VMware best practices for NFS multipathing I’m using Route based on IP hash teaming with all NICs active. I have 2 bonded NICs with separate IPs on the Synology and NFS4.1 enabled. I created the connection to one of the shares in VMware using NFS4 to both of the IPs on the Synology.

Is there any way to know if multipathing is actually working on the Synology?

I’ve enabled NFS 4.1 on the Synology and created two bonded NIC pairs with separate IPs.

On the ESXi side I created 2 vmkernel ports with separate IPs and per VMware best practices I set the NIC teaming load balancing to Route based on IP hash. Then connected to the NFS share on the Synology using NFS4 and added both bonded NIC IPs to the volume connection.

The overall speed when copying data from one volume to another doesn’t seem to really be any faster, and in ESXi I only see a single vmnic being utilized, even when transferring multiple files at once. I see the overall speed balanced between both bonded pairs on the Synology side, but only see a single NFS “connected user” under the Resource Monitor. I would expect to see 2 or more NFS connections here or 2 IPs for one connection.

Just like with iSCSI, you will want to make sure that each NFS path can only use a single NIC. The easiest way to do this is to use separate subnets. For example, I used 172.16.20.x, 172.16.21.x, and 172.16.22.x, so I had 3 VMK ports on ESXi with 172.16.20.10, 172.16.21.10, and 172.16.22.10. On the Synology, I had 3 NICs, and each was assigned a single IP: 172.16.20.1, 172.16.21.1, and 172.16.22.1.

This ensures that 172.16.20.10 can only talk to 172.16.20.1, and to no other IP on the Synology. Don’t do any NIC teaming; just assign each VMK port its own NIC. On the Synology, do the same, because bonding NICs together is useful for many-to-one connections, but not for a one-to-one connection.
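
If you want to double-check an addressing plan like this before cabling it up, a small shell sketch can do it. It assumes every interface uses a 255.255.255.0 mask (so the subnet is just the first three octets); check_paths and prefix24 are hypothetical helpers, and each argument is a vmk_ip:nas_ip pair.

```shell
#!/bin/sh
# Sketch: verify that each ESXi vmk / NAS NIC pair sits in its own /24.
# Assumption: all interfaces use a 255.255.255.0 netmask.

# Print the first three octets of a dotted-quad IPv4 address.
prefix24() { echo "${1%.*}"; }

# check_paths vmk_ip:nas_ip [vmk_ip:nas_ip ...]
check_paths() {
    seen=""
    for pair in "$@"; do
        vmk=${pair%%:*}
        nas=${pair##*:}
        net=$(prefix24 "$vmk")
        # Both ends of a path must be on the same subnet.
        if [ "$net" != "$(prefix24 "$nas")" ]; then
            echo "mismatch: $vmk and $nas are on different subnets"
            return 1
        fi
        # No two paths may share a subnet, or traffic could pick either NIC.
        case " $seen " in
            *" $net "*)
                echo "overlap: $net.0/24 is used by more than one path"
                return 1 ;;
        esac
        seen="$seen $net"
    done
    echo "ok: $# isolated paths"
}

check_paths 172.16.20.10:172.16.20.1 172.16.21.10:172.16.21.1 172.16.22.10:172.16.22.1
```

With the three pairs above it reports ok; putting two pairs on the same 172.16.20.x network, as in a single-subnet iSCSI-style layout, makes it report an overlap instead.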

I’ll give that a shot. Generally speaking, on the iSCSI side I’ve used the same network, but each vmk is set up using teaming where a specific vmnic is active and the others are passive. This way each vmnic can hit any of the NICs on the SAN.

I think I can still do that, but I may have had another networking issue in there.

So you see multiple connections to the Synology when looking at connected users?

Yea, as long as you have a point-to-point path, and not a point-to-multipoint path, it should all work. As far as seeing multiple connections, I believe you should, but I don’t use NFS 4.1 anymore, as I switched over to 10Gb NFS 3 to keep VAAI.

Worth mentioning: VAAI is supported with NFS 4.1 in at least vSphere 6.7. Check out https://www.jameskilby.co.uk/nfs-4-1/ for an example setup.

Absolutely. At the time of the article, NFS 4.1 was not supported on Synology, and VAAI was not supported on vSphere for NFS 4.1. However, both are now supported with the latest Synology OS and with vSphere 6.7!

I see that sometime after the last comment in this post (by Tim, dated April 1, 2019), Synology came out with a new version of their NFS VAAI plug-in:

https://www.synology.com/en-us/releaseNote/NFSVAAIPlugin

Version 1.2-1008 was released 2019-06-25 which says “Supports VMware Esxi 6.7”.

So far, I have 4 ESXi hosts all still using NFS 3. I haven’t paid much attention to this datastore for years, since it’s been working fine for my needs. However, I just saw this nice article, and after reading this article, it looks like I can go ahead and reconfigure my datastore to use NFS 4.1? I have ESXi 6.7 U3.