A ways back, Synology was kind enough to send me a DS923+ to test out. I’ve used a DS1817+ in my lab for quite some time now, so a unit with an upgraded CPU was welcome. The first thing I did was upgrade it to 32 GB of RAM, because that makes the NAS useful for more than just storage, such as running Docker containers! So I thought, why not run my own AI on this 923+?

Large Language Model

Simply put, a large language model (LLM) is a type of AI (artificial intelligence) that is trained on a huge amount of text and designed to generate human-like responses. The best-known example is ChatGPT from OpenAI. But interacting with those models requires sending your information to a third party. What if you want to run everything locally in your own lab? You will need a platform, a model, and a web UI, and in this case I will host all of it in Docker on the Synology.

Containers

First, I needed to install Container Manager on the 923+, which is very straightforward: open the Package Center, search for “container”, and click Install.

The platform

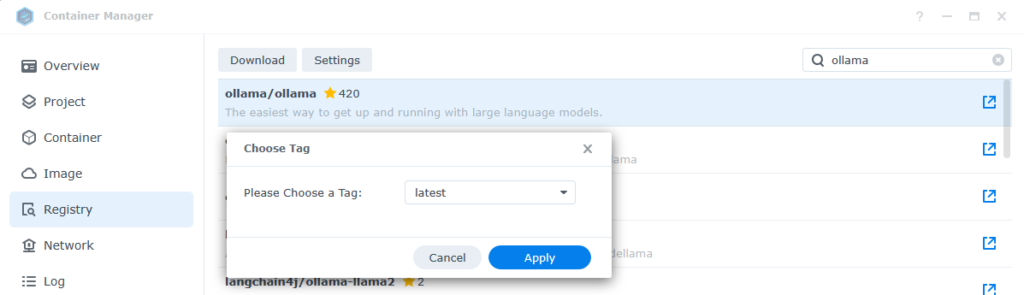

For this project, we will use Ollama (ollama.ai), an open source project that lets users run, create, and share large language models. We will use it to run a model. In Container Manager, we first need to add the image under Registry: search for “ollama”, click Download, and then Apply to select the latest tag. This downloads the image to the local registry but does not deploy any containers yet.

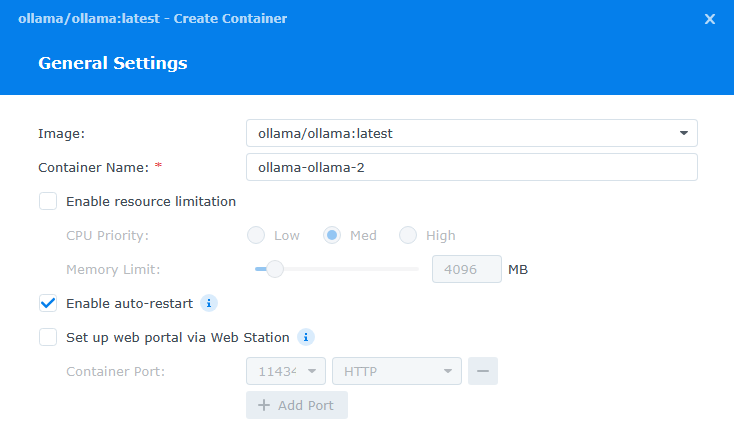

Once downloaded, navigate to Container and click Create. In the Image dropdown, select ollama/ollama:latest, and the Container name will be filled in automatically. I chose to enable auto-restart for this container in the event it crashes or something goes wrong.

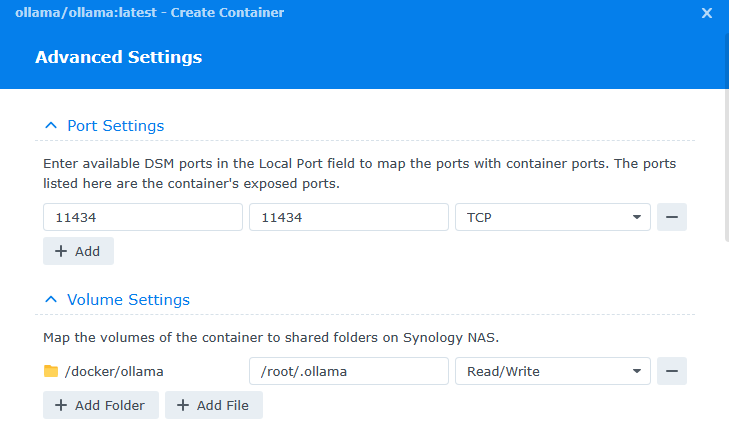

After clicking Next, there are a couple of things to fill in. First, enter “11434” for the Local Port under Port Settings. Next, under Volume Settings, click Add Folder and create a folder called “ollama” under the Docker folder. We will map it to /root/.ollama inside the container and leave it Read/Write.

Next, finish the wizard and choose to run the container. It may take a few seconds to start.
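For comparison, the Container Manager steps above correspond roughly to a single `docker run` command. The sketch below only assembles that command as a Python list and executes nothing. The host path `/volume1/docker/ollama` and `--restart unless-stopped` as a stand-in for the auto-restart checkbox are my assumptions, so adjust them to your own volume and restart policy.

```python
import shlex

def ollama_run_args(host_dir: str = "/volume1/docker/ollama",
                    local_port: int = 11434) -> list[str]:
    """Assemble (but do not run) a docker run command mirroring the wizard settings."""
    return [
        "docker", "run", "-d",
        "--name", "ollama",
        # Assumption: roughly equivalent to ticking auto-restart in Container Manager.
        "--restart", "unless-stopped",
        # Local Port -> port 11434, where Ollama's API listens inside the container.
        "-p", f"{local_port}:11434",
        # Assumed NAS path for the "ollama" folder; Docker mounts it read/write by default.
        "-v", f"{host_dir}:/root/.ollama",
        "ollama/ollama:latest",
    ]

if __name__ == "__main__":
    # Print a shell-quoted command line that could be pasted into an SSH session.
    print(shlex.join(ollama_run_args()))
```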

Download a Model

Now that we have our platform, it’s time to download a Large Language Model into Ollama. Highlight the container, and select Action and Open Terminal. This will open up a Terminal window allowing us to interact with the command line inside the container. Click create, and highlight the new bash terminal that was opened.

Inside the terminal, simply type:

`ollama pull llama3`

This downloads the llama3 model, which at the time of writing is the most recent. The other available models are listed in the Ollama model library (ollama.com).
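The same download can also be triggered over Ollama's HTTP API, which is handy once the container is running and you would rather not open a terminal. This is a minimal standard-library sketch. It assumes the `/api/pull` endpoint with a JSON body of `model` and `stream` (older Ollama releases documented the field as `name`), and `192.168.1.159` is the NAS IP from my lab, not yours.

```python
import json
import urllib.request

# Assumption: the IP of the NAS running the Ollama container; replace with your own.
OLLAMA_URL = "http://192.168.1.159:11434"

def build_pull_request(model: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a POST /api/pull request for the given model."""
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def pull_model(model: str, base_url: str = OLLAMA_URL, timeout: float = 3600.0) -> dict:
    """Send the request; with stream=False the reply arrives only after the download ends."""
    with urllib.request.urlopen(build_pull_request(model, base_url), timeout=timeout) as resp:
        return json.loads(resp.read())

# Usage (needs a reachable Ollama container):
# print(pull_model("llama3"))
```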

Now, I could start interacting with the model right away from this CLI by typing `ollama run llama3`. However, I want a nice web UI that I can easily use from any PC on my network.
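The CLI is not the only way in: other programs on the network can talk to the same model through Ollama's HTTP API on port 11434, which is also what the web UI below relies on. A minimal sketch, assuming the `/api/generate` endpoint with `stream` set to false so the answer comes back as one JSON object whose `response` field holds the text. The IP is again my lab's NAS.

```python
import json
import urllib.request

# Assumption: the NAS IP from this post; replace with your own.
OLLAMA_URL = "http://192.168.1.159:11434"

def build_generate_request(prompt: str, model: str = "llama3",
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a non-streaming POST /api/generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt: str, model: str = "llama3", base_url: str = OLLAMA_URL,
        timeout: float = 600.0) -> str:
    """Send one prompt and return the generated text from the "response" field."""
    req = build_generate_request(prompt, model, base_url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]

# Usage (CPU-only inference on the NAS can take a while):
# print(ask("Why is the sky blue?"))
```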

WebUI

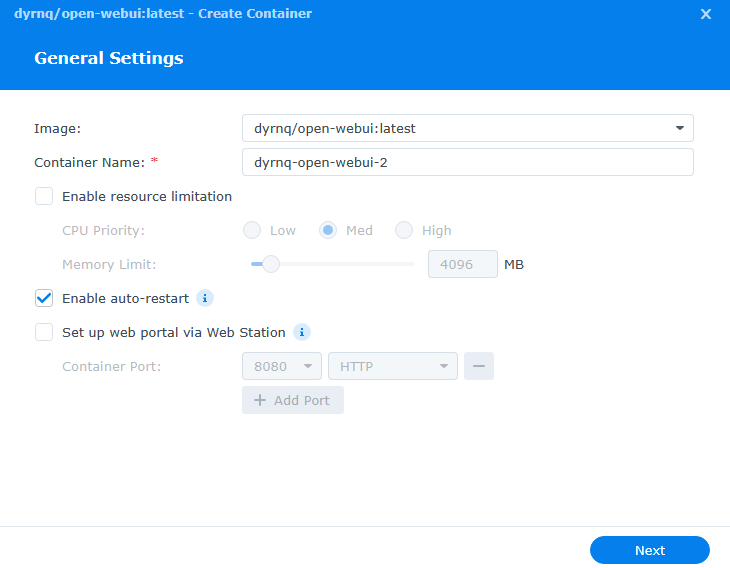

With the new AI all set up, it’s time to add a web front end, and just like Ollama, it will run in a container too. Back in Container Manager, download a new image to the Registry: search for dyrnq/open-webui and click Download. Once the image is downloaded, go to the Container section and click Create. For the image, select the open-webui image we just downloaded, and enable auto-restart.

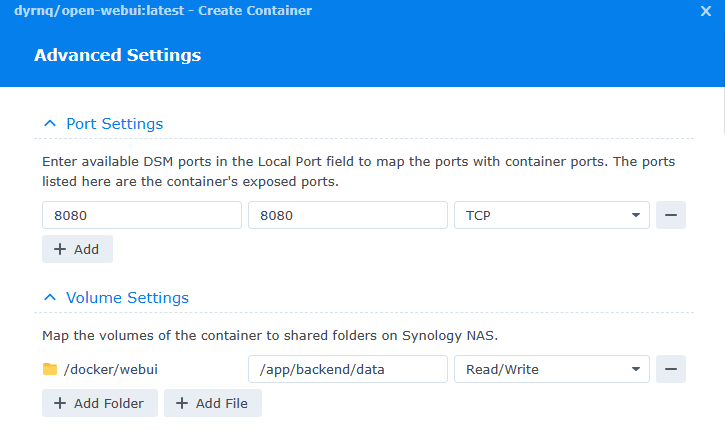

Like our first container, there are a couple of parameters to set. For the ports, I used 8080 for both the local and container port. Additionally, a new folder needs to be created to map a volume: I created a folder called webui inside my docker folder and mapped it to /app/backend/data.

Last, under Environment Variables, we need to add the URL for Ollama to the OLLAMA_BASE_URL entry. I used http://192.168.1.159:11434, which is the IP of my NAS plus the Ollama port. There are a couple of other variables without values, but they aren’t required for a lab, so you can remove them. They will be highlighted red when you click Next, which makes them easy to find; just click the minus button beside each one. Then finish the wizard and choose to run the container.
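As with Ollama, these Open WebUI settings can be summarized as a `docker run` command. Again, this sketch only builds the argument list. The host path `/volume1/docker/webui`, the container name `open-webui`, the `latest` tag, and the restart policy are assumptions, while the 8080 port mapping, the `/app/backend/data` mount, and `OLLAMA_BASE_URL` come from the steps above.

```python
import shlex

def webui_run_args(ollama_url: str = "http://192.168.1.159:11434",
                   host_dir: str = "/volume1/docker/webui",
                   local_port: int = 8080) -> list[str]:
    """Assemble (but do not run) a docker run command for the Open WebUI container."""
    return [
        "docker", "run", "-d",
        "--name", "open-webui",                  # hypothetical name; the wizard fills in its own
        "--restart", "unless-stopped",           # assumed stand-in for auto-restart
        "-p", f"{local_port}:8080",              # 8080 on the NAS -> 8080 in the container
        "-v", f"{host_dir}:/app/backend/data",   # persistent data such as user accounts
        "-e", f"OLLAMA_BASE_URL={ollama_url}",   # where the web UI finds the Ollama API
        "dyrnq/open-webui:latest",
    ]

if __name__ == "__main__":
    print(shlex.join(webui_run_args()))
```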

Private AI complete!

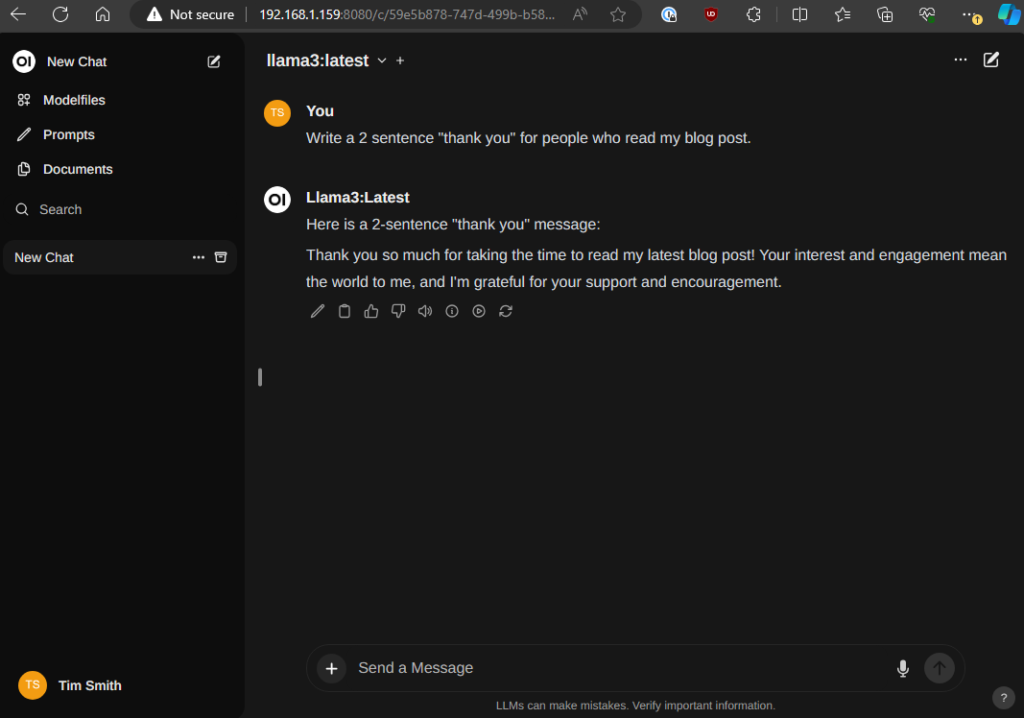

Once the container is running, I pointed my web browser to http://192.168.1.159:8080 and was greeted by Open WebUI. The first time, you will need to register a user account. The account is stored locally, and this first user becomes the admin. Once logged in, you can select a model at the top of the window; since only one was downloaded, llama3 is the only model in the list. Now you can ask your AI anything!
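If the model dropdown comes up empty, it helps to ask Ollama directly which models it has. A small sketch, assuming Ollama's `GET /api/tags` endpoint, which returns a JSON object with a `models` list whose entries carry a `name` such as `llama3:latest`. The default IP is my lab's NAS.

```python
import json
import urllib.request

def model_names(tags: dict) -> list[str]:
    """Pull the model names out of a parsed /api/tags response."""
    return [m["name"] for m in tags.get("models", [])]

def list_models(base_url: str = "http://192.168.1.159:11434",
                timeout: float = 5.0) -> list[str]:
    """Query the running Ollama container for its locally available models."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))

# Usage: an empty list means this Ollama instance has no models yet, while a
# connection error points at OLLAMA_BASE_URL or the port mapping instead.
# print(list_models())
```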

I’m having trouble getting the web UI to function and would like to discuss some items you mentioned in the document. First, when creating a folder for Ollama, you refer to it as “/root/.ollama.” Did you mean to put a “.” in front of “ollama” to make it a hidden file? Secondly, the OLLAMA_BASE_URL is assigned the variable “ollama.” Should this variable be set to “http://192.168.1.159:11434/ollama” or should I remove the “/ollama” at the end?

Despite following the steps carefully, I’m still unable to access the URL. From the log on the web UI, I see the error message “ERROR:apps.ollama.main:Connection error.” Do you have any thoughts on what might be causing this issue and why I can’t access the URL?

Yes, the “.” in front makes it a hidden file. And yes on the url ending in /ollama.

Thanks for the detailed post! Sadly, I can’t get anything to happen. I have Llama installed. I see it in the picker, I select it, but when I try to ask it something… nothing happens.

Thoughts on how to troubleshoot?

Thank you for a great guide! Almost have it working, I just can’t select a model. I did pull llama3 through bash but it’s not available in the dropdown in Open WebUI. Odd.

I ran into the same issue. Haven’t found a solution.

Hey, I get this error: Ollama: 500, message=’Internal Server Error’, url=URL(‘http://192.168.0.2:11434/api/chat’). I can confirm Ollama is running at 11434, but at http://192.168.0.2:11434/api/chat I get 404 page not found. Where’s the problem? Thanks in advance.

Unfortunately you’ll have to look at the terminal output of your container to see what messages it’s showing.

I am having the same issue. Also fails when I work in terminal.
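One note on the 404 above: as far as I can tell, Ollama only serves `/api/chat` for POST requests, so opening that URL in a browser (which sends a GET) and seeing "404 page not found" is expected and is not what causes the 500. To exercise the endpoint the way Open WebUI does, a POST with a `messages` list is needed. A minimal sketch, assuming the non-streaming form of `/api/chat` whose reply carries the text in `message.content`; the model name is an assumption and the IP in the usage line is the commenter's.

```python
import json
import urllib.request

def build_chat_request(base_url: str, messages: list[dict],
                       model: str = "llama3") -> urllib.request.Request:
    """Build (but do not send) a non-streaming POST /api/chat request."""
    payload = {"model": model, "messages": messages, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def chat(base_url: str, text: str, model: str = "llama3", timeout: float = 600.0) -> str:
    """Send a single user message and return the assistant's reply text."""
    req = build_chat_request(base_url, [{"role": "user", "content": text}], model)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["message"]["content"]

# Usage:
# print(chat("http://192.168.0.2:11434", "Hello!"))
```

If the server answers with a 500, `urllib` raises `urllib.error.HTTPError`; reading its body (`err.read()`) may show Ollama's own error message, alongside the container's terminal output.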

Hello,

I’m a bit confused about the use of the local IP in this tutorial. Is the http://192.168.1.159 IP you mentioned the same IP as your Synology NAS?

Yes, in this case that was the IP of my NAS.

How can I make this IP public? Suggestions?

Thank you! I can start the Web UI and everything looks great! But there are no models to choose from. How can I get another model? I did download the model as described in the instructions.

I must have removed OLLAMA_BASE_URL by mistake. Added that again and everything works now. Thanks!

It works, but it’s terribly slow: 1 minute to answer a query. I also have 32 GB in my DS923+, and for every question the CPU peaks at 250% and memory goes to 11 GB.

@Tim did you do any tweaking to optimize speed?

No. Unfortunately, it’s very CPU intensive, and since there’s no GPU, it’s slow running on the CPU.
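To put a number on "slow", Ollama's non-streaming `/api/generate` reply includes `eval_count` (tokens generated) and `eval_duration` (in nanoseconds), so generation speed can be computed directly and compared across machines. A small sketch, assuming those fields; the IP and model are the ones used in the post.

```python
import json
import urllib.request

def tokens_per_second(reply: dict) -> float:
    """Generation speed from an Ollama reply; eval_duration is in nanoseconds."""
    return reply["eval_count"] / (reply["eval_duration"] / 1e9)

def measure(prompt: str, model: str = "llama3",
            base_url: str = "http://192.168.1.159:11434") -> float:
    """Run one non-streaming generation and return its tokens per second."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(f"{base_url}/api/generate", data=payload,
                                 headers={"Content-Type": "application/json"}, method="POST")
    with urllib.request.urlopen(req, timeout=600.0) as resp:
        return tokens_per_second(json.loads(resp.read()))

# Usage:
# print(f"{measure('Write a haiku about NAS boxes.'):.1f} tokens/s")
```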

I ended up using this tutorial to configure open webui to point to a more powerful PC running Ollama on my local LAN. The results are much better FWIW. The main pitfall was changing Ollama on the PC to run on 0.0.0.0 instead of localhost.

That’s a good idea. I ended up moving mine to a machine with a GPU, but moved everything. I may switch back to having the synology run the front end.

Max and Tim, I would love to see this tutorial if you happen to have it handy! Not very technically knowledgeable myself, but my understanding is your objective is to host the LLM on Synology while routing the actual request to your PC (with a GPU) for processing?

Hi Max! Would love to see this tutorial if you have it handy! Had this exact same thought, but haven’t found anyone who’s executed it yet other than you two! Looking to securely allow connections to OpenWebUI hosted on Synology with the actual processing and compute handled by the GPU on the host PC and Ollama. Almost like a middle man!

Following up here, to clarify, my understanding is that you’re running OpenWebUI as its own container and essentially just pointing its Ollama host to 0.0.0.0? Pretty sure that’s just a few simple changes in Ollama and the WebUI, if I remember correctly? Super smart!

It’s fun because the Synology is good at running the frontend only. I’m also going to try out Tailscale this weekend so I can talk to openwebui while I’m out of the house.

Nice! I use cloudflare tunnels in my homelab to expose tho ha externally securely.

I was thinking that it would be awesome to see a tutorial on how to augment a Synology-hosted Open WebUI to run image generators like Stable Diffusion alongside Ollama LLMs. I’m not sure how straightforward this is, as I don’t know whether the Open WebUI config can point to more than one running server IP.

Thanks for this tutorial. I am trying to run ollama pull llama3.1 in bash but get this error: Error: pull model manifest: Get “https://registry.ollama.ai/v2/library/llama3.1/manifest/latest”: dial tcp —–: i/o timeout.

I’m running ollama on my local PC in the LAN (192.168.1.44) and Open WEBUI on Synology NAS (192.168.1.144). I’ve specified OLLAMA URL to match my local PC IP address, but looks like Open WEBUI can’t access it?

Is there any way to configure it to connect to remote ollama server?

Thanks!

Yes, it’s just a matter of using that IP instead of the one in my example.
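Before pointing OLLAMA_BASE_URL at another machine, it is worth confirming that the NAS can reach that machine's port 11434 at all. By default Ollama listens only on 127.0.0.1, which is why making it listen on 0.0.0.0 (via the `OLLAMA_HOST` environment variable on the PC) was the fix mentioned above; a firewall on the PC can also block the port. A minimal standard-library sketch, to be run from the NAS or another LAN machine; the IP in the usage line is the commenter's PC.

```python
import socket

def port_open(host: str, port: int = 11434, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unreachable: Ollama is not reachable from here.
        return False

# Usage, from the NAS:
# print(port_open("192.168.1.44"))
```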

Quick question: We’re looking to leverage something like this with a large set of unstructured data sitting in an existing shared volume on an in-house Synology server. Is it just a matter of mapping the container volume to the existing shared data store on the box?

After following the steps, I got a blank white screen when trying to access the WebUI.

Turned out that the config as described above (thank you @Tim!) was correct; I just needed to wait, for half an hour or so. That was unexpected, but it works, albeit slowly.

That’s neat! Is it possible to give it a folder for file access? It would be nice if I could put a file there and have it create a translated version under a new file name.

That’s a good question, and I’m unsure. I imagine it may be possible.

Hello Tim,

Finally I found exactly what I was looking for.

Amazing instructions! I think ChatGPT copied you, because it gave me the same steps.

Anyhow, I will be investing in the same setup, but you know how expensive those hard drives are.

My question to you would be: Can I start with two 16T hard drives and expand as need without messing up the configuration?

Great!

Yeah, you can expand from 2 to 4 drives. Just use SHR for redundancy, and it will handle the extra drives later on.

Hi,

How are you able to run on Synology? The container overview shows CPU 400% utilisation when running Ollama 🙂

Great Instructions

Did it all correctly

Cannot connect

192.168.1.159 took too long to respond is what I get. though it has now been running for 48 minutes.

192.168.1.159 is a private IP used in my lab, you would need to connect to whatever IP address you are using for your deployment.

Thanks for this great manual, but I have the same problem: I also can’t select a model. The download seems to have succeeded, because the terminal shows “writing manifest –> success”.

And when I open the IP with the port :11434 there is “Ollama is running” in the browser.

Hi, you have a great tutorial!

May I ask for some help switching to a GPU, given the high CPU consumption?

How to update the Open-webui docker container to pull new versions without losing previous config done inside the Open Web UI Admin panel?

Thanks for this great article!

You have a typo in your tutorial when creating the WebUI Docker container. WebUI stores its data at “/app/backend/data” (not “/app/backened/data” as written). I was wondering why no data was stored in my save directory and why I had to create a new account every time.

thanks for the catch!

For those of you who want to run an LLM on your NAS but don’t have the computing power, you can set up Ollama/Open WebUI and use an API from https://openrouter.ai/ or https://deepinfra.com to run large LLMs for 1-2 dollars a month. For example, you can run Llama-3.3-70B-Instruct-Turbo for free on OpenRouter (though the free tier doesn’t prioritize speed) or on DeepInfra for $0.038/$0.12 per million input/output tokens. I’ve been using them for months, and I think I’ve racked up ~.25 cents in fees (though I mostly use my own home-hosted LLMs). But given the cost of electricity, I think I’m going to switch to using them fully. I hope this helps.

Thanks, works for me on DS1621+